SHOPS & SPOTS PROJECT

This is Shops & Spots, one of my most challenging projects. It lets you explore places in and around Manchester through creative videos that take you on a journey to discover some of the most interesting locations in the area.

All sound design is created by yours truly.

STAR CRAFT II GAME TRAILER

This report examines the design and delivery of mixed sound content for the cinematic of the Star Craft II game trailer. The sound was produced by replicating original material found in ‘Star Craft II’ (original version), ‘Star Wars- The Force Unleashed’, ‘Dead Space- Launch’, ‘Star Citizen Gameplay Trailer’ and a few others. Throughout the report, the technical and creative strategies used to produce the sound content are critically analysed in relation to digital tools, acoustic principles and recording techniques.

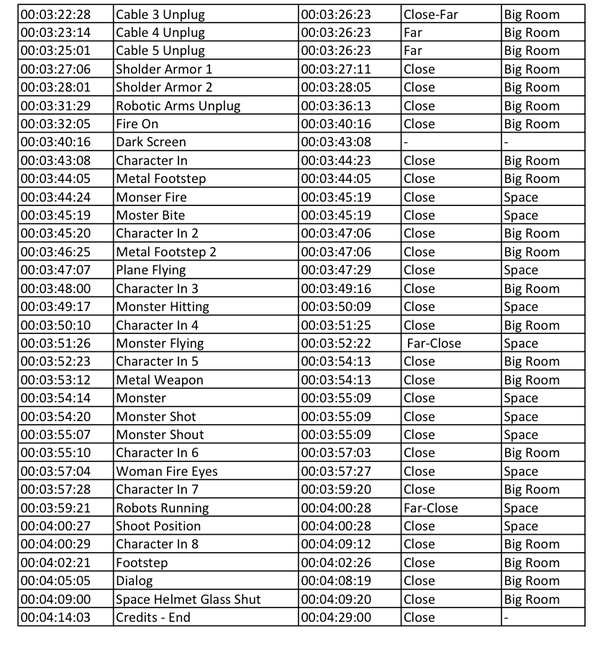

The first step in the pre-production phase was a careful analysis of the original game trailer to distinguish the diegetic from the non-diegetic sounds. These sounds are listed in the cue sheet (see Figure 01). To analyse the cinematic more closely, it was watched with the sound turned on and off, which helped to identify the desired approach to the sound content while still taking the original material into account.

Figure 01

The Pro Tools markers feature simplified the management of the audio and made positioning clips faster. The Sample Library was essential for testing ideas and exploring them within the session. Furthermore, the tracks were organised in Groups for an improved workflow.

Samples were first trimmed, time-stretched and synchronised with the animation. A synthesizer was then used wherever new sounds had to be created, since the fictional nature of the cinematic means that authentic audio cannot be captured from a real source. Using the Massive synthesizer, real-time automation was applied to the Colour of a White Noise and then, separately, of a Metal Noise. The sound was also shaped with a high-pass filter in order to obtain the desired timbre (see Figures 02 and 03).

Figure 02

Figure 03
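The filtering step can be illustrated outside the synthesizer. The sketch below is not how Massive works internally; it is a minimal first-order high-pass filter applied to white noise, and the function names, cutoff and sample rate are assumptions chosen only for illustration.

```python
import math
import random

def white_noise(n, seed=0):
    """Return n pseudo-random samples in the range [-1, 1]."""
    rng = random.Random(seed)
    return [rng.uniform(-1.0, 1.0) for _ in range(n)]

def high_pass(samples, cutoff_hz, sample_rate=44100):
    """First-order (RC-style) high-pass filter: attenuates content below cutoff_hz."""
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    dt = 1.0 / sample_rate
    alpha = rc / (rc + dt)
    out = []
    prev_in = 0.0
    prev_out = 0.0
    for x in samples:
        y = alpha * (prev_out + x - prev_in)
        out.append(y)
        prev_in, prev_out = x, y
    return out

# Hypothetical usage: a brighter noise bed with the low end removed
filtered = high_pass(white_noise(44100), cutoff_hz=2000)
```

Raising `cutoff_hz` removes more of the low end, which is broadly the effect that automating a filter has on a noise source.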

Foley was recorded to enhance the sound but was not used in the end, as it did not give the desired results. The dialogue at the end is a pre-defined vocal recording, which was captured in the D-Control studio with a Neumann U 87.

The dialogue scene at 00:03:29:17 was created to suggest that the spaceship is an automaton assisting the main character. The spaceship was turned into a character through the a cappella female vocal. Although this is not featured in the video content, the sound was used to emphasise a close connection between the main character and the spaceship.

Buses were essential in the post-production stage. Each group of tracks was sent to an Auxiliary Track, which was used as a submix and allowed the levels between the groups to be balanced more accurately (Hurtig, 1988). This technique also reduced the risk of overloading the processor, as the number of tracks is considerable. Additionally, another bus was used for reverberation, in order to recreate virtual spaces (Smithers, 2010).

The following environments are presented within the cinematic: the assembly room inside the spaceship, the dark room at the beginning of the trailer and the fictional outside spaces. A large hall reverb was used to emulate the virtual environment within the spaceship assembly room. The dark room was portrayed as small and claustrophobic, so a large plate reverb was used with a low wet level, as the source is close to the listener. For the outside spaces, reverberation was unnecessary, as they were created by layering different atmospheric samples, a few of which already contained reverb.

Equalisation was mainly used to achieve better separation in the mix (Swallow, 2011). It also served to make the animation more credible, as the frequency content of a sound usually changes with the distance and position of its source. This was done using non-real-time automation on the Master Bypass at the points where the sound perspective changes.

Compression was mostly applied with a side-chain, so that the robotic footsteps were emphasised while the ambience and the other sound effects were compressed. The same technique was applied to the heartbeat FX to make the sequence more dramatic through low frequencies.
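The side-chain idea can be sketched in a few lines. This is a deliberately simplified ducking stage, not the compressor plug-in used in the session: the function name `duck` and the threshold, reduction and release values are all assumptions for illustration.

```python
def duck(background, trigger, threshold=0.2, reduction=0.5, release=0.999):
    """Lower `background` while the `trigger` (key) signal is above the threshold."""
    out = []
    envelope = 0.0
    for bg, key in zip(background, trigger):
        level = abs(key)
        # Envelope follower: jump up instantly, fall back gradually
        envelope = level if level > envelope else envelope * release
        gain = reduction if envelope > threshold else 1.0
        out.append(bg * gain)
    return out

# Hypothetical usage: the ambience dips whenever a footstep hits
ambience = [1.0] * 5
footsteps = [0.0, 1.0, 0.0, 0.0, 0.0]
ducked = duck(ambience, footsteps, release=0.5)
```

Here the footsteps act as the key signal: the ambience is reduced while the envelope of the footsteps stays above the threshold and recovers once it has decayed.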

The panning technique involved real-time automation and aimed to give dynamics to the animation as well as to enhance the mix space (White, n.d.).

The purpose of the soundtrack was to create an emotional cinematic that draws the viewer into a world where battle is about to begin. To portray this mood, instruments generally associated with military contexts were chosen. According to Vienna Symphonic Library (n.d.), the timpani or kettledrum were played in ancient times for announcements of triumphal marches and processions. For the soundtrack, this was accompanied by other percussion instruments, which together with the bass set the rhythm. Additionally, the brass, strings and the flute were added to the mix in order to simulate an adrenaline rush and further depict a battlefield (see Figures 04 and 05).

Figure 04

Figure 05

To conclude, producing the sound for the Star Craft II game trailer was a constructive challenge through which new skills and knowledge were developed. Although the project delivers the desired result, on reflection it might have been better to experiment more with recording Foley, which is a recommendation for future work in order to improve sound accuracy.

THE TOWER DEFENSE GAME LEVEL PROJECT

The project started with playing the original Tower Defense game while paying attention to the interactive sounds and their acoustic characteristics. Following this, a Spotting Session was held, in which the asset list of sounds from the game level was created, together with a list of things that could be used to produce those sounds (see Figure 01). The next phase involved searching the Sample Library for appropriate sounds to use within the project. The audio files were then edited in Pro Tools and bounced as mono files in the required file format (44.1 kHz sample rate at 16 bits) and following the naming protocols. This proved crucial to how the game engine processed the content at a later stage, when the assets were implemented in the game engine. Moreover, folders were created and named accordingly in order to improve the workflow. Organisation forms the basis of successful projects.

Figure 01
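The delivery format described above (mono, 16-bit, 44.1 kHz) can be illustrated with Python's standard `wave` module. This is only a sketch of the format, not the Pro Tools bounce used in the project; the helper names `write_mono_16bit` and `describe` are hypothetical.

```python
import struct
import wave

def write_mono_16bit(path, samples, sample_rate=44100):
    """Write floats in [-1, 1] as a mono, 16-bit PCM WAV file."""
    frames = bytearray()
    for s in samples:
        s = max(-1.0, min(1.0, s))  # clamp so the value fits in 16 bits
        frames += struct.pack("<h", int(round(s * 32767)))
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)        # mono
        wav.setsampwidth(2)        # 2 bytes = 16 bits
        wav.setframerate(sample_rate)
        wav.writeframes(bytes(frames))

def describe(path):
    """Return (channels, bit depth, sample rate) of a WAV file."""
    with wave.open(path, "rb") as wav:
        return wav.getnchannels(), wav.getsampwidth() * 8, wav.getframerate()
```

Running `describe` over a folder of bounced assets is one way to confirm that every file matches the required format before it is imported into the game engine.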

At this stage, Foley was used to record certain sound effects, such as the gold when it spawns, disappears or is picked up. Voiceovers were recorded for the Characters, especially for the parts where the minions attack or die. This was done in order to make the game more realistic. A Neumann U 87 was used for the recordings due to its unique frequency and transient response characteristics (see Figure 02).

Figure 02

Low-frequency interference was reduced at the input of the microphone amplifier by engaging the microphone's low-frequency cutoff switch. This decision was taken in order to leave space for other sounds to fit better in the mix.

The iZotope RX 4 Denoiser and Dialogue Denoiser were used from the AudioSuite menu to remove the unwanted noise that was captured during the recording phase. Even though the sound effects were all recorded at once, in the same live room, each recording was denoised separately because each occupied a different frequency range (see Figures 03 and 04).

Figure 03

Figure 04

At this point, the desired sounds had been collected and were imported into Pro Tools for editing. Equalisers were used on every sound to give each one its own frequency range and make it fit better in the mix space (see Figure 05). Furthermore, Fades were used to prevent clicks and pops at the edges of the original recordings (see Figure 06).

Figure 05

Figure 06

The arbalest shot sound was built from multiple arrow samples that differed in pitch and timbre. After layering them at staggered intervals to create the sound of three arrows being shot, a Limiter was applied to keep the combined sound at a good level without distortion.
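The layering step can be sketched as summing samples at different offsets. The peak-scaling stage below is only a stand-in for the Limiter plug-in (a real limiter reduces gain dynamically rather than scaling the whole clip), and the function names are hypothetical.

```python
def layer(sounds):
    """Mix (offset, samples) pairs into one list, summing where they overlap."""
    length = max(offset + len(samples) for offset, samples in sounds)
    mix = [0.0] * length
    for offset, samples in sounds:
        for i, value in enumerate(samples):
            mix[offset + i] += value
    return mix

def scale_to_ceiling(samples, ceiling=1.0):
    """Scale the mix down only if its peak exceeds the ceiling."""
    peak = max((abs(v) for v in samples), default=0.0)
    if peak <= ceiling:
        return list(samples)
    factor = ceiling / peak
    return [v * factor for v in samples]

# Hypothetical usage: three arrow samples staggered by a few samples each
arrows = [(0, [1.0, 0.5]), (1, [0.8, 0.4]), (2, [0.6, 0.3])]
shot = scale_to_ceiling(layer(arrows))
```

Staggering the offsets keeps the three arrows audible as separate events, while the final stage prevents the summed peaks from exceeding full scale.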

Moreover, the D-Verb plug-in was used to add reverb so that the sound fits the picture better, as the action takes place underground, in a cave (see Figure 07). The Reverb and Limiter were also applied to the Arbalest Projectile Hit Flesh and Arbalest Projectile Hit sounds. These sounds were built in a similar way: several samples with a similar impact-like character, such as hitting a cabbage, an egg, a watermelon and a tomato, were layered to produce a convincing effect (see Figure 08).

Figure 07

Figure 08

The desired Cannon Shot sound was made by layering several cannon shots that differed in timbre and pitch. Moreover, a Reverb was used to create a realistic space, and a Limiter was used to attenuate the transients while preserving the overall dynamics (see Figure 09).

Figure 09

The Building Construction Start sound was created by stacking sounds on top of one another, using multiple hammer sounds that differed in pitch and timbre. Because the Sound Cue is 8-9 seconds long, the sounds were cut, aligned by their transients and bounced at that precise length so that they stay synchronised with the action within the game.

Moreover, for the sake of making the overall sound of construction more realistic, a Handsaw Sawing Wood sound was added as a layer. Furthermore, in order to add variation to the building construction process, a Dropping Gravel Hiss sound was added to the Building Attachment Added sound cue (See Figure 10).

Figure 10

Different techniques were used to create the Gold sounds. A more prominent Delay effect was used for the sound where the gold is picked up.

Moreover, for the sound where the gold disappears, the desired result was achieved by reversing the original sound. The idea behind this was to produce a more accurate distinction between the sounds so as to give dynamism to the game and make it more interactive for the player (See Figures 11 & 12).

Furthermore, a Delay was used within the game level for the sound cue where the gold disappears, in order to delay the sound and synchronise the audio with the video more closely.

Figure 11

Figure 12
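Both Gold treatments are simple to express on raw sample data. The sketch below is an illustration under assumptions, not the plug-in chain used in the session: `delay` adds a fixed number of decaying echoes, and the parameter names and values are hypothetical.

```python
def reverse(samples):
    """Play the sound backwards (used here for the 'gold disappears' idea)."""
    return samples[::-1]

def delay(samples, delay_samples, feedback=0.5, repeats=3):
    """Add `repeats` echoes, each `delay_samples` later and quieter by `feedback`."""
    out = list(samples) + [0.0] * (delay_samples * repeats)
    for r in range(1, repeats + 1):
        gain = feedback ** r
        offset = delay_samples * r
        for i, value in enumerate(samples):
            out[offset + i] += value * gain
    return out

# Hypothetical usage with a tiny three-sample "coin" sound
coin = [1.0, 0.5, 0.25]
picked_up = delay(coin, delay_samples=4)
disappears = reverse(coin)
```

Because the two results have clearly different envelopes (a decaying tail versus a swelling onset), the player can tell the two events apart by ear.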

The ambience was designed to gently surround the player from a distance at all times. Three different Sound Cues were used in order to enhance the sound of the wind.

Moreover, a Lava Idle Sound Cue is present in the game level as a Background, which was made by layering and pitching multiple water samples.

Also, the Pitch plug-in was used to bring down the pitch of my voice in order to create the minion voices (see Figure 13).

Figure 13

Finally, Modulation was used on most of the Sound Cues in order to add variation by playing them back at different pitches. As a result, the overall sound is more diverse and the game more interactive.
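The principle behind this kind of variation can be sketched as picking a random pitch multiplier each time a sound plays. The code is not the game engine's modulation node; the range of 0.9 to 1.1 and the naive resampling method are assumptions for illustration.

```python
import random

def random_pitch(pitch_min=0.9, pitch_max=1.1, rng=random):
    """Pick a playback-rate multiplier; 1.0 leaves the pitch unchanged."""
    return rng.uniform(pitch_min, pitch_max)

def resample(samples, pitch):
    """Naive pitch change: read through the samples at `pitch` times the speed,
    using linear interpolation between neighbouring samples."""
    if len(samples) < 2:
        return list(samples)
    n_out = int((len(samples) - 1) / pitch) + 1
    out = []
    for i in range(n_out):
        pos = i * pitch
        lo = int(pos)
        hi = min(lo + 1, len(samples) - 1)
        frac = pos - lo
        out.append(samples[lo] * (1.0 - frac) + samples[hi] * frac)
    return out

# Hypothetical usage: each playback of the same cue sounds slightly different
cue = [0.0, 1.0, 2.0, 3.0, 4.0]
variation = resample(cue, random_pitch())
```

A multiplier above 1.0 makes the sound shorter and higher, and one below 1.0 makes it longer and lower, so repeated events such as arrow shots do not sound identical.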

Moreover, Attenuation was used due to its ability to lower a sound in volume as the player moves away from it. This proved to be effective especially because it adds another kind of variation.

Usually, the Attenuation has two settings: a radius, which defines the area around the sound where it is loudest, and a falloff distance, over which the sound smoothly becomes quieter (see Figure 14).

Figure 14
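The relationship between radius and falloff distance can be written as a small gain function. The linear falloff curve below is an assumption for illustration; game engines typically offer several curve shapes, and the name `attenuation_gain` is hypothetical.

```python
def attenuation_gain(distance, radius, falloff_distance):
    """Return a volume multiplier between 0.0 and 1.0 for a listener at `distance`.

    Inside `radius` the sound plays at full volume; beyond it the volume
    fades linearly to silence over `falloff_distance`.
    """
    if distance <= radius:
        return 1.0
    if distance >= radius + falloff_distance:
        return 0.0
    return 1.0 - (distance - radius) / falloff_distance

# Hypothetical usage: radius of 100 units, falloff over the next 400 units
for d in (0, 100, 300, 500):
    print(d, attenuation_gain(d, radius=100, falloff_distance=400))
```

With these example values the sound is at full volume up to 100 units, at half volume at 300 units and silent from 500 units onwards.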

THE ALMA ANIMATION PROJECT

This is a log that aims to explain, evaluate and reflect on the sound assets required to complete the sound design task. The log will cover the planning, pre-production, recording and mixing stages. In addition, a collection of techniques used in the project will be analysed and evaluated further in this paper.

Planning

The planning stage started with assessing the requirements for undertaking the project. This included choosing the video sequence that the team would create sound design for, securing access to the studios, and agreeing on methods of communication within the group and on meeting dates. Moreover, the team chose a range of data storage options, including network storage, memory sticks, external hard drives and SD memory cards.

The team chose the video entitled ‘Alma’ because it presented a great opportunity to create an imaginary world and share emotions through sound design. Therefore, this work is inspired by the original ‘Alma’ video, which can be found on YouTube.

Pre-Production

The first step in the pre-production stage was a team brainstorm to identify the diegetic and non-diegetic sounds. The idea was to blend these together smoothly, in order to draw the audience into Alma’s imaginary world. As a result, the team identified the diegetic sounds, which were recorded and edited in the next phases using the Foley technique. The non-diegetic sounds included specific words and phrases with an emotional meaning.

Production

Once the session was created in Pro Tools, the team was able to transfer the results of the previous brainstorm and research into the software.

The team used markers, which made the audio easier to manage and clips quicker to position. Furthermore, the team made use of the sample library, which was helpful for testing ideas and experimenting with the animation. Research on simulating sounds was also undertaken, such as creating footsteps in the snow using cornstalks and creating the wind by recording a specific kind of whistling.

Recording

The recording phase started with simulating the sounds that have a source within the story world, and was carried out both indoors, in the studio, and outdoors. The latter was achieved using a Zoom H4n recorder and different condenser microphones. There are three types of environment within the moving picture: the city, the shop and the puppet.

The city was constructed as a cyclic ambience at both the beginning and the end of the video, through the Christmas Market and the church bell. To enhance the sound of the wind and make it more realistic, a white-noise oscillator was used.

For the shop environment, a room tone was recorded, whereas within the puppet there is no room tone, as the purpose was to create a claustrophobic atmosphere using audio effects on Alma’s breaths at a later stage. Multiple sound effects were used. The organic sounds were used to add to the authenticity of the video.

Moreover, external sound effects added dramatic significance by expressing the character’s inner thoughts and helped the audience to understand the meaning of the scene. A Theremin was used to create anticipation as well as fear when Alma reaches towards the doll.

Composing the score started at this stage, after the samples in the session had been replaced with the Foley recordings, sound effects and atmosphere. The music is in 3/4 time, which resembles the rhythm of the human heartbeat. Its purpose is to set the mood of the video and create an emotional connection with the audience.

The soundtrack is a mixture of a nursery rhyme and a Christmas song that aims to emphasise Alma’s childish nature to the listener. Throughout the piece, the music varies in intensity, timbre, pitch, speed, shape and organisation in order to highlight the contrast between the different moods it aims to evoke in the audience.

Mixing

The first step in the mixing stage was adjusting the sound levels and the panning in order to synchronise the tracks with the animation. Different types of compression and equalisation were performed to achieve resemblance to the acoustic characteristics of the elements in the video.

Automation and audio effects (delay, reverb) were used to enhance the characteristics of the spaces in the picture. The sound made by the shop door when it opens is louder because loudness is a signature of power, and the intention was to emphasise the dominance of the shop, which may be seen as a character within the piece.

Conclusion

The mix was checked in both mono and stereo, in multiple studios and on headphones. This was to ensure that it translates well between different listening environments.

The techniques used support the impression of a joyful Christmas at the beginning, which turns out to be sad in the end.

As the purpose is to keep the audience under tension, the main objective of the video was to transport the listener into Alma’s world, which was successfully achieved through sound design. However, looking critically at the sound design process, one recommendation for the future would be to create an organised cue sheet in order to understand the process better and to improve the workflow.